2026 AI Security Predictions: What Vendors and Researchers Are Forecasting

A distilled summary of the 2026 AI security consensus among leading vendors: the attack vectors and threats most organizations will face.

From Chatbots to Cyber Weapons: What OpenAI’s ‘High-Risk’ Warning Really Means for Security Teams

OpenAI just acknowledged that its next-generation models are likely to pose a “high” cybersecurity risk. This post breaks down what that really means: LLMs as dual-use cyber infrastructure, the offense–defense balance, and how to architect your AI stack under an assumed high-risk model.

Phish-mas 2025: How AI Is Supercharging Holiday Scams

AI is quietly turning Black Friday, Christmas, and year-end shopping into peak season for highly targeted scams, fake stores, and account-takeover fraud. Here's how the new wave of AI-enabled holiday scams works, and what defenders and consumers can do about it.

How Graph Fibrations Revolutionize Non-Human Identity Management in Modern Clouds

Exploring a novel mathematical approach, based on graph fibrations, to tame the explosion of non-human identities and permissions in cloud environments.

Shai-Hulud 2.0: NPM Supply Chain Attacks Highlight Risks Beneath AI

A critical look at the Shai-Hulud 2.0 malware campaign and how traditional software supply-chain threats undermine the foundations of AI platforms.

Runtime Guardrails for LLMs and Agentic Systems in 2025

Explore how runtime guardrails protect large language models and agentic AI systems from prompt injection, jailbreaks, data leaks, and tool misuse, with a look at leading vendor solutions.

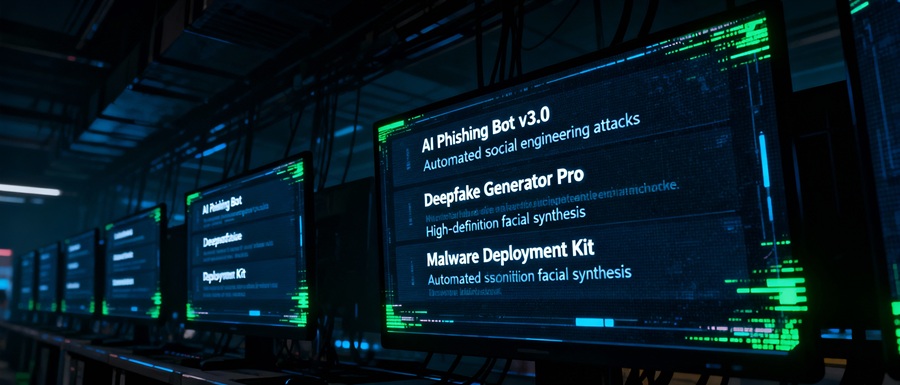

Cybercrime-as-a-Service: AI Tools on the Dark Web in 2025

How AI-powered toolkits are revolutionizing cybercrime, putting scalable attacks, deepfake fraud, and adaptive malware within reach of anyone, and what defenders can do in response.

Sandboxed AI Intelligence: Why Secure AI Labs Are a Game-Changer for Safe Innovation

How secure sandbox environments are transforming AI engineering by making experimentation safer and security assessments sharper.

State of Deepfake Prevention Technology: Trends, Challenges, and Detection Approaches in 2025

An overview of current deepfake detection technologies, industry challenges, and best practices for defending against synthetic media fraud.