AI Is an Amplifier, Not a Fixer: When Transformation Becomes a Stress Test

A concise, opinionated look at how AI adoption in security acts as a stress test that amplifies existing weaknesses in data, systems, and processes rather than magically fixing them.

If You're Going to Run OpenClaw, Do It Like This! (or Don't Do It at All!)

A security‑first walkthrough for installing, hosting, and testing OpenClaw without handing it the keys to your life.

The Invisible Threat: Why Backdoor Weights in Transformer Models Are Impossible to Detect

Modern transformer models ship with billions of opaque parameters and undisclosed training data. This post explains why backdoor weights are effectively impossible to verify and why runtime guardrails are mandatory even if you sanitize prompts.

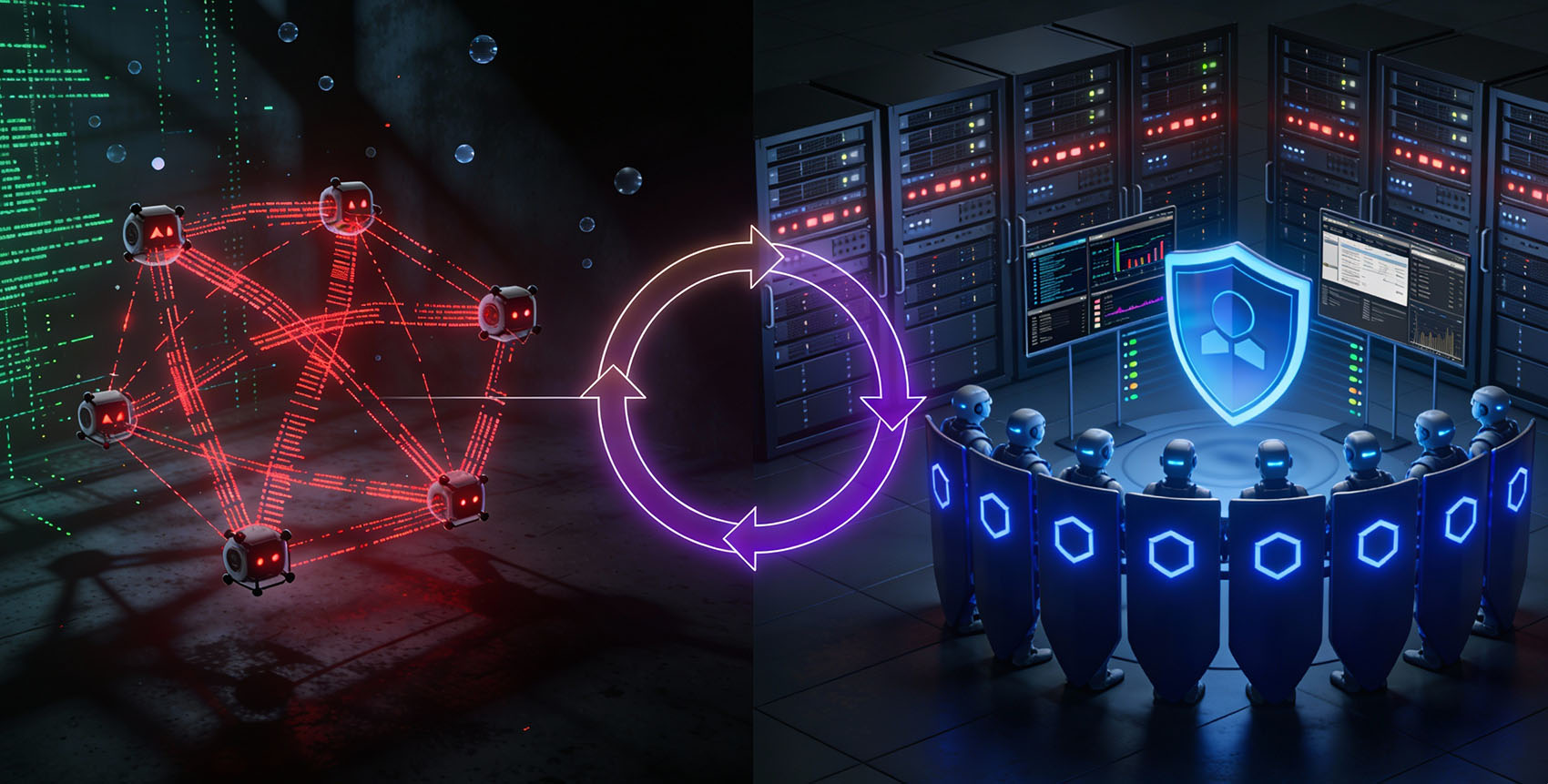

The Ralph Loop: How Agentic Automation Is Reshaping Both Malware Development and Cyber Defense

The Ralph Loop pattern is accelerating both malware development and cyber defense. This post unpacks how threat actors are weaponizing agentic automation and how security teams can adopt the same architecture (Ralph-style loops, guardrails, and agentic orchestration) to keep pace.

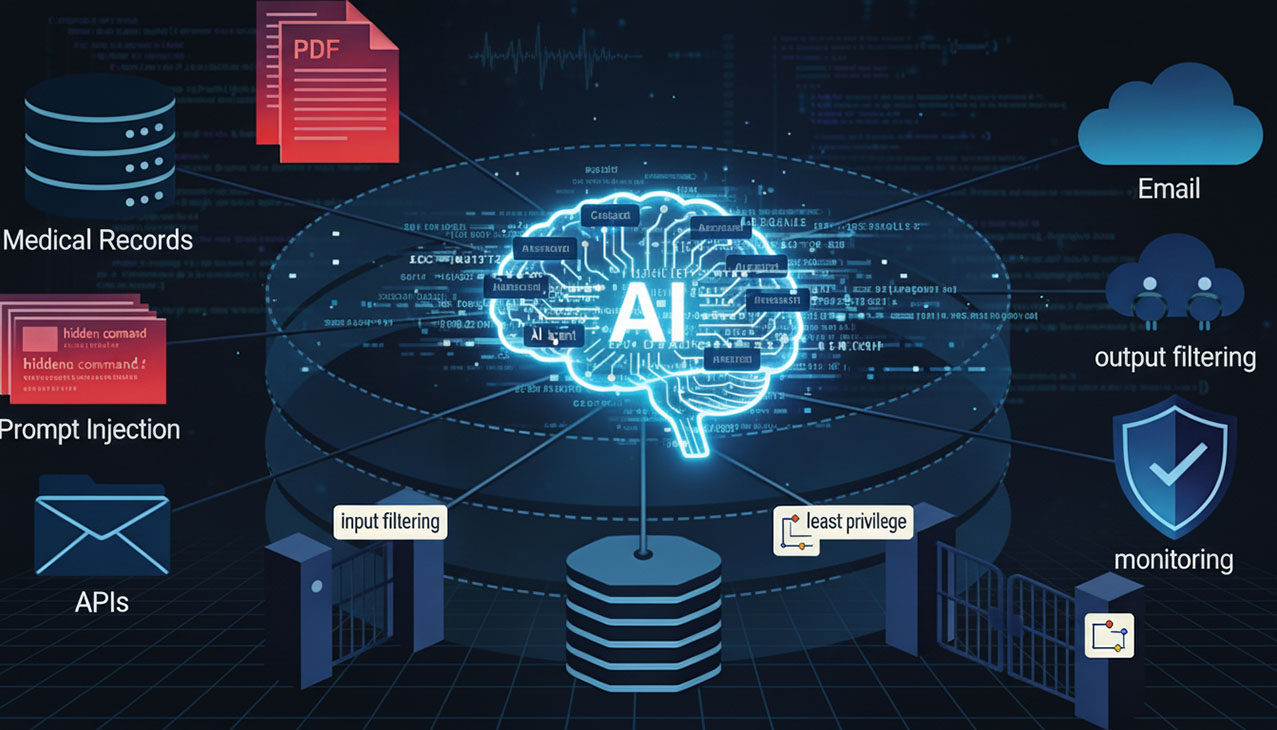

Agentic AI as an Attack Surface: Why LLMs Need Containment, Not Trust

Agentic AI systems are quietly expanding the attack surface of every system they connect to. This post breaks down direct and indirect prompt injection and the concrete patterns security teams should enforce: containment, input/output filtering, least privilege, and zero trust for agents.

2026 AI Security Predictions: What Vendors and Researchers Are Forecasting

A distilled summary of the 2026 AI security consensus among leading vendors: the attack vectors and threats most organizations are likely to face.

From Chatbots to Cyber Weapons: What OpenAI’s ‘High-Risk’ Warning Really Means for Security Teams

OpenAI has acknowledged that its next-generation models are likely to pose a “high” cybersecurity risk. This post breaks down what that really means: LLMs as dual-use cyber infrastructure, the offense–defense balance, and how to architect your AI stack on the assumption that those models are high-risk.

Phish‑mas 2025: How AI Is Supercharging Holiday Scams

AI is quietly turning Black Friday, Christmas, and year-end shopping into peak season for highly targeted scams, fake stores, and account-takeover fraud. Here’s how the new wave of AI‑enabled holiday scams works, and what defenders and consumers can do about it.

How Graph Fibrations Revolutionize Non-Human Identity Management in Modern Clouds

Exploring a novel mathematical approach, based on graph fibrations, to tame the explosion of non-human identities and permissions in cloud environments.
