The Ralph Loop: How Agentic Automation is Reshaping Both Malware Development and Cyber Defense


Introduction

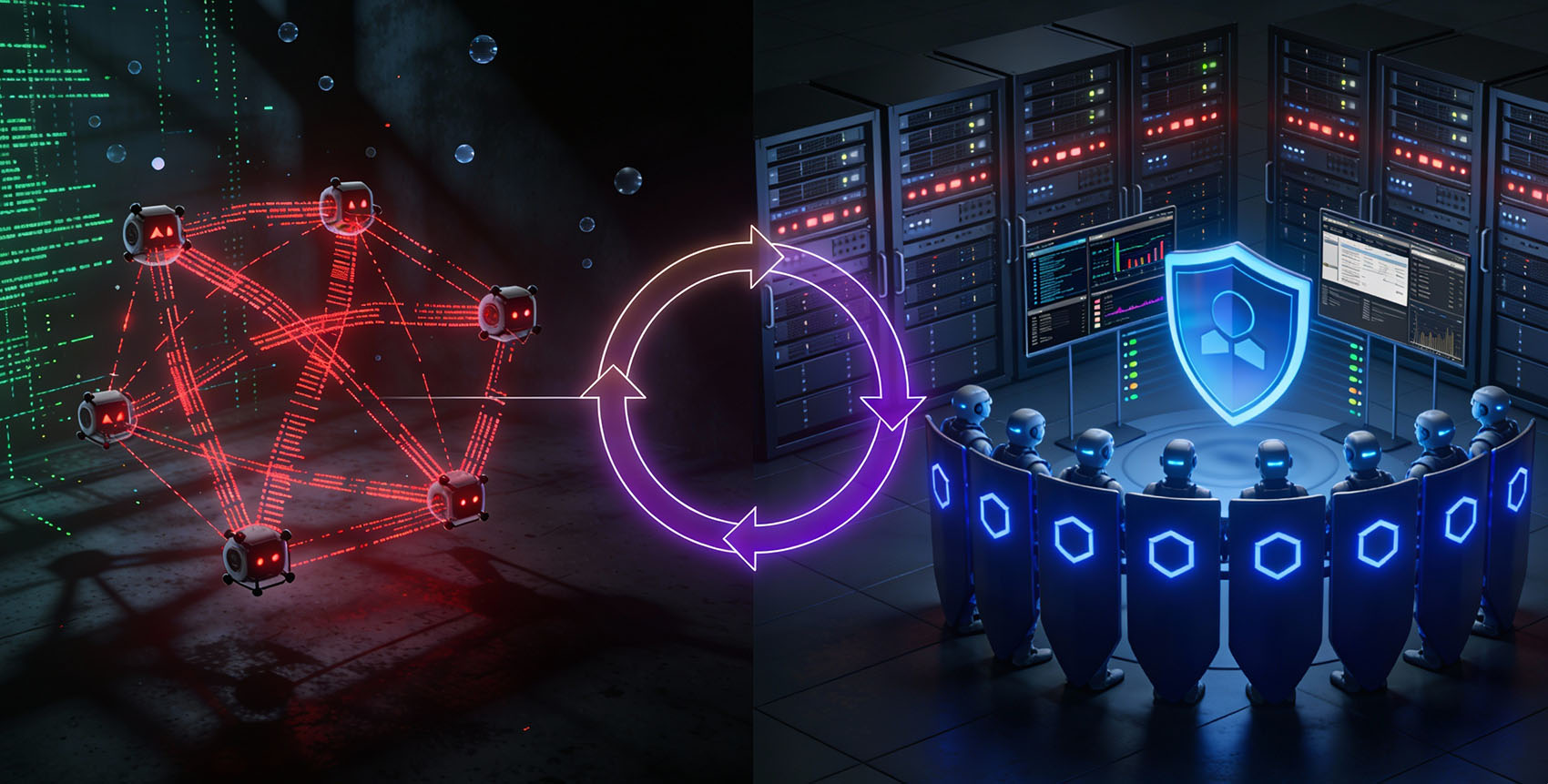

The Ralph Loop, a deterministic agentic pattern that cycles AI coding agents through tightly controlled iterations, has become a force multiplier for software automation. Its file-based, iterative architecture is particularly well-suited to modular malware development, while security teams are beginning to use the same orchestration patterns for autonomous defense. This post explores the dual-use nature of Ralph-style loops, how attackers are exploiting them, and how defenders can respond with equivalent automation.

Understanding the Ralph Loop

The Ralph Loop represents a shift from long, stateful chats to short, stateless iterations over a persistent filesystem. Each agent invocation starts from a clean context and reads:

- A stable specification (for example, a PRD or task file).

- State files that track progress and guardrails.

- The current code or artifacts in the working directory (linearb+1).

A typical loop looks like:

- Iteration N: Agent reads spec + state → does one task → updates files → exits.

- Iteration N+1: Fresh agent reads updated state → does the next task → updates files → exits.
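The two iterations above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the file names `spec.md` and `state.json`, the `pending`/`done` state layout, and the `stub_agent` callable are all assumptions made for the example, and the stub stands in for whatever model invocation a real loop would use.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_iteration(workdir: Path, agent: Callable[[str, dict], str]) -> bool:
    """Run one stateless iteration; return False when no tasks remain."""
    spec = (workdir / "spec.md").read_text()
    state_path = workdir / "state.json"
    state = json.loads(state_path.read_text())
    if not state["pending"]:
        return False
    task = state["pending"].pop(0)
    # The agent receives only the spec and the current task, never prior chat history.
    result = agent(spec, {"task": task})
    (workdir / f"{task}.out").write_text(result)
    state["done"].append(task)
    # Progress is persisted to disk so the next fresh invocation can pick it up.
    state_path.write_text(json.dumps(state, indent=2))
    return True


def stub_agent(spec: str, context: dict) -> str:
    # Hypothetical stand-in for a real model call.
    return f"completed {context['task']} per spec ({len(spec)} chars)"


def run_loop(workdir: Path, agent: Callable[[str, dict], str], max_iterations: int = 10) -> int:
    """Repeat iterations until the task list is empty or the cap is reached."""
    count = 0
    while count < max_iterations and run_iteration(workdir, agent):
        count += 1
    return count


def demo() -> dict:
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        (workdir / "spec.md").write_text("Build a CSV parser with tests.")
        initial = {"pending": ["parse_header", "parse_rows"], "done": []}
        (workdir / "state.json").write_text(json.dumps(initial))
        iterations = run_loop(workdir, stub_agent)
        final_state = json.loads((workdir / "state.json").read_text())
        return {"iterations": iterations, "state": final_state}
```

Calling `demo()` runs two iterations against a temporary directory. The `max_iterations` cap is one simple way to keep a loop bounded; real orchestrators would likely add further stop conditions.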

By anchoring memory in files instead of conversation history, Ralph avoids context rot—where LLMs lose track of constraints as tokens accumulate. It also naturally enforces modularity: each iteration focuses on a single, well-scoped change (youtube, linkedin, linearb).

Why This Pattern Fits Malware Development

Malware development is inherently modular: operators assemble persistence, C2, delivery, and evasion components into frameworks. Ralph’s design—small, repeatable steps against a shared filesystem—maps directly onto this workflow (youtube, research.checkpoint).

The VoidLink Linux framework is an early example of AI-accelerated malware at scale. Researchers reported roughly 88k lines of code produced in days, using spec-driven decomposition that strongly resembles a Ralph-style loop even if not labeled as such. Complex requirements were broken into small tasks and executed sequentially with AI assistance (research.checkpoint+1, thehackernews+1).

From AI helpers to AI-native malware

Threat actors have moved beyond using LLMs as occasional coding aids. Google’s AI threat reporting highlights “Level 2–3” usage, where:

- AI generates and debugs code, including evasion logic.

- Malware calls AI APIs during runtime to mutate or obfuscate itself (cloud.google+1).

PROMPTFLUX is a canonical example: a VBScript dropper that uses an AI API to regularly rewrite its own code while preserving behavior, effectively embedding self-regeneration and making static signatures brittle (cybersecuritydive+1).
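From the defender's side, the brittleness of static signatures can be shown with two harmless snippets that do the same thing but differ in text. The sketch below is illustrative only: the two variants, the `KNOWN_BAD_HASHES` set, and the event names in `BEHAVIOR_RULE` are hypothetical and not taken from any real detection product.

```python
import hashlib

# Two benign, functionally equivalent scripts that differ only in a variable name.
variant_a = "x = 2\nprint(x + 3)\n"
variant_b = "total = 2\nprint(total + 3)\n"


def file_signature(source: str) -> str:
    """Static signature: SHA-256 of the exact file contents."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


# Assume only the first variant has been seen and signed.
KNOWN_BAD_HASHES = {file_signature(variant_a)}


def hash_match(source: str) -> bool:
    return file_signature(source) in KNOWN_BAD_HASHES


# Hypothetical behavioral rule: flag any script that both calls an AI API
# and rewrites its own file, regardless of what its text looks like.
BEHAVIOR_RULE = {"calls_ai_api", "rewrites_own_file"}


def behavior_match(observed_events: set) -> bool:
    return BEHAVIOR_RULE <= observed_events
```

Here `hash_match(variant_b)` is false even though the behavior is unchanged, while a rule keyed to observed behavior still matches after every rewrite. That is the reason self-rewriting code pushes detection toward behavioral telemetry.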

Ralph Loops as a Threat Multiplier

When the Ralph pattern is applied deliberately to offensive development, several capabilities stand out.

Parallel and scalable development

Attackers can run multiple loops at once: one iterating on persistence, another refining network protocols, another testing evasion. This parallelism lets a small team (or a sole operator) build and harden a full framework at a pace that previously required organized groups (linearb).

Automated hardening and red teaming

Review or “refinement” phases can be weaponized. A dedicated “red team agent” can:

- Test each build in a lab against EDR and AV.

- Detect which behaviors trigger alerts.

- Feed precise instructions back into the loop to evade those controls (aihero, youtube).

Over many iterations, this behaves like a continuous adversarial training pipeline, but aimed at improving malware rather than defenses.

Segmented context for OPSEC

Because each iteration runs statelessly and reads only from disk, operators can isolate campaigns at the file-system level. Loops for different customers or targets can be separated into distinct directories and specifications, limiting cross-contamination if one environment is compromised (dev+1).

Speed and volume

The main change is economic. With AI-driven decomposition and automation, a handful of operators can produce customized, high-entropy malware variants faster than traditional reverse-engineering and response workflows can keep up with. VoidLink’s rapid growth and similar campaigns show how quickly a framework can reach industrial scale once a loop is in place (thehackernews+1, sonatype, trendmicro).

Defensive Countermoves: Using Agentic AI for Defense

The same properties that make Ralph so attractive to attackers (repeatability, modularity, and spec-driven iteration) also make it powerful for defenders. Agentic SOCs are starting to mirror this pattern for detection and response (scworld+1, redcanary+1, reliaquest).

SOC workflows as loops

A Ralph-style SOC loop might look like:

- Detection agent: triages alerts, enriches with context, and classifies severity.

- Investigation agent: gathers related telemetry, runs playbook steps, and summarizes findings.

- Containment agent: executes predefined actions (isolate host, revoke tokens, block indicators).

- Orchestrator: maintains state, enforces guardrails, and routes edge cases to human analysts (reliaquest).
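As a rough sketch of the four roles above, the following Python passes a shared incident record through three narrow agents under an orchestrator. The `Incident` fields, severity thresholds, action names, and the rule that medium-severity incidents go to a human are assumptions chosen for illustration; a real SOC would back each function with its own tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    """Shared incident state that every agent reads and updates."""
    alert: dict
    severity: str = "unknown"
    findings: list = field(default_factory=list)
    actions: list = field(default_factory=list)
    needs_human: bool = False


# Hypothetical set of predefined containment actions.
ALLOWED_ACTIONS = {"isolate_host", "revoke_tokens", "block_indicator"}


def detection_agent(incident: Incident) -> None:
    # Classify severity from an assumed numeric alert score.
    score = incident.alert.get("score", 0)
    incident.severity = "high" if score >= 80 else "medium" if score >= 40 else "low"


def investigation_agent(incident: Incident) -> None:
    # Placeholder for telemetry gathering and playbook steps.
    host = incident.alert.get("host", "unknown-host")
    incident.findings.append(f"collected telemetry for {host}")


def containment_agent(incident: Incident) -> None:
    # Only high-severity incidents trigger an automatic action in this sketch.
    if incident.severity == "high":
        incident.actions.append("isolate_host")


def orchestrate(alert: dict) -> Incident:
    incident = Incident(alert=alert)
    for agent in (detection_agent, investigation_agent, containment_agent):
        agent(incident)
    # Guardrail: anything outside the predefined action set goes to an analyst.
    if any(action not in ALLOWED_ACTIONS for action in incident.actions):
        incident.needs_human = True
    # Edge case (assumed policy): ambiguous medium-severity incidents need review.
    if incident.severity == "medium":
        incident.needs_human = True
    return incident
```

For example, `orchestrate({"host": "web-01", "score": 92})` ends with an `isolate_host` action and no escalation, while a score of 55 produces no action and sets `needs_human`. Because each agent only touches the shared record, every step can be logged and audited separately.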

Rather than a monolithic “AI SOC assistant,” you get a series of narrow, auditable agents operating over a shared incident state.

Scoping autonomy with the AWS matrix

To prevent overreach, organizations can align their loops with AWS’s Agentic AI Security Scoping Matrix (aws.amazon+1):

- Scope 1–2: Read-only or approval-gated actions (loop proposes, human disposes).

- Scope 3: Autonomous actions under strict guardrails, with real-time monitoring.

- Scope 4: Largely self-directed agents with strategic oversight only.

Higher scopes demand stronger runtime checks, behavioral authorization, and continuous validation to avoid capability drift (aws.amazon).
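One way to make these scopes enforceable is a small decision function that every proposed action passes through. The sketch below follows the scope descriptions in this post rather than AWS's own definitions: treating Scope 1 as read-only and Scope 2 as approval-gated, along with the enum names and return strings, is an assumption for the example.

```python
from enum import IntEnum


class Scope(IntEnum):
    READ_ONLY = 1         # assumed: the loop may only observe
    APPROVAL_GATED = 2    # loop proposes, human disposes
    GUARDED_AUTONOMY = 3  # autonomous under strict guardrails
    SELF_DIRECTED = 4     # strategic oversight only


def decide(
    scope: Scope,
    is_write: bool,
    human_approved: bool = False,
    guardrails_pass: bool = True,
) -> str:
    """Return 'allow', 'deny', or 'needs_approval' for a proposed action."""
    # A failed runtime guardrail check blocks the action at every scope.
    if not guardrails_pass:
        return "deny"
    # Read-only actions are permitted at all scopes in this sketch.
    if not is_write:
        return "allow"
    if scope == Scope.READ_ONLY:
        return "deny"
    if scope == Scope.APPROVAL_GATED:
        return "allow" if human_approved else "needs_approval"
    # Scopes 3 and 4 act autonomously once guardrails pass.
    return "allow"
```

Keeping the decision in one function means a change in an agent's scope is a one-line configuration change rather than a prompt edit, and every decision can be logged.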

Automated malware analysis and red teaming

Defensive loops shine when they are aimed at the same problems attackers are automating:

- Sandbox agents: detonate samples, record behavior, and tag campaigns in near real time.

- Red team agents: continuously probe controls with generated exploits or misconfigurations.

- Fusion agents: correlate intel (blogs, CVEs, telemetry) and kick off remediation workflows (exabeam+1, blog.gigamon, redcanary).

Instead of human analysts being the bottleneck, they become reviewers and governors of an always-on agentic fabric.

Critical Controls for Agentic Systems

If both offense and defense are adopting loops, the differentiator becomes how safely they are run. Three categories stand out.

Runtime monitoring and event-based state

Agentic systems need runtime protection that treats each agent interaction as an event, not as opaque state. Effective designs:

- Log all prompts, tool calls, file writes, and outbound requests as an immutable stream.

- Reconstruct current state by replaying events.

- Use detectors trained on “normal” agent behavior to flag anomalies (snyk+1, labs.snyk).

This is as much observability and forensics as it is security control.
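The event-stream idea can be approximated with a hash-chained, append-only log whose state is rebuilt by replay. The following is a minimal sketch under stated assumptions: the event kinds `file_write` and `tool_call`, the payload fields, and the in-memory list are illustrative, and a production system would write to tamper-resistant storage rather than a Python list.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first event


def _digest(kind: str, payload: dict, prev: str) -> str:
    body = json.dumps({"kind": kind, "payload": payload, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class EventLog:
    events: list = field(default_factory=list)

    def append(self, kind: str, payload: dict) -> dict:
        # Each event commits to the hash of the one before it.
        prev = self.events[-1]["hash"] if self.events else GENESIS
        event = {"kind": kind, "payload": payload, "prev": prev,
                 "hash": _digest(kind, payload, prev)}
        self.events.append(event)
        return event

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered event breaks it."""
        prev = GENESIS
        for event in self.events:
            expected = _digest(event["kind"], event["payload"], prev)
            if event["prev"] != prev or event["hash"] != expected:
                return False
            prev = event["hash"]
        return True


def replay(events: list) -> dict:
    """Reconstruct current state purely from the recorded events."""
    state = {"files": {}, "tool_calls": 0}
    for event in events:
        if event["kind"] == "file_write":
            state["files"][event["payload"]["path"]] = event["payload"]["sha256"]
        elif event["kind"] == "tool_call":
            state["tool_calls"] += 1
    return state
```

Because state is derived only from `replay`, an investigator can rebuild what an agent had done at any point in the stream, and `verify` gives a cheap check that the history has not been altered after the fact.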

Guardrails as code

Guardrails should be first-class artifacts in the loop, not just instructions in a system prompt. A simple pattern:

guardrails/

├── permit-paths.md # Allowed file system operations

├── deny-commands.md # Prohibited system calls

├── rate-limits.md # API call quotas per iteration

├── entropy-thresholds.md # Code complexity limits

└── behavioral-signatures.md # Expected agent patterns

Agents are expected to read and update guardrail files as they learn. When they violate them, orchestrators should terminate the loop, isolate the environment, and raise alerts (dev+1, aihero+1).
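A sketch of how an orchestrator might enforce two of these files follows. It assumes the contents of `permit-paths.md` and `deny-commands.md` have already been parsed into the `PERMIT_PATHS` and `DENY_COMMANDS` collections shown; the specific paths, command names, and request format are hypothetical.

```python
import posixpath
import shlex

# Assumed to be loaded from guardrails/permit-paths.md and deny-commands.md.
PERMIT_PATHS = ["workspace/src", "workspace/tests"]
DENY_COMMANDS = {"curl", "ssh", "rm"}


class GuardrailViolation(Exception):
    """Raised when an agent request breaks a guardrail."""


def check_write(path: str) -> str:
    # Normalize first so "../" segments cannot escape a permitted directory.
    normalized = posixpath.normpath(path)
    allowed = any(
        normalized == root or normalized.startswith(root + "/")
        for root in PERMIT_PATHS
    )
    if not allowed:
        raise GuardrailViolation(f"write outside permitted paths: {normalized}")
    return normalized


def check_command(command_line: str) -> list:
    try:
        argv = shlex.split(command_line)
    except ValueError as exc:
        raise GuardrailViolation(f"unparseable command: {exc}") from exc
    if not argv:
        raise GuardrailViolation("empty command")
    # Compare the program name only, so absolute paths do not bypass the list.
    if posixpath.basename(argv[0]) in DENY_COMMANDS:
        raise GuardrailViolation(f"prohibited command: {argv[0]}")
    return argv


def enforce(requests: list) -> dict:
    """Check (kind, value) requests in order; stop at the first violation."""
    for index, (kind, value) in enumerate(requests):
        try:
            if kind == "write":
                check_write(value)
            elif kind == "exec":
                check_command(value)
            else:
                raise GuardrailViolation(f"unknown request kind: {kind}")
        except GuardrailViolation as exc:
            # A real orchestrator would also isolate the environment and alert.
            return {"status": "terminated", "at": index, "reason": str(exc)}
    return {"status": "ok", "at": len(requests), "reason": ""}
```

A deny list like this is easy to sidestep (for example through an interpreter that shells out), so it is best treated as one layer alongside sandboxing and egress control rather than a complete control.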

Zero Trust for agents

Agents should be treated like untrusted microservices:

- Dynamic authorization per tool invocation.

- Strong identities for each agent instance and tool call.

- Network microsegmentation and strict egress control.

- Encrypted state to reduce lateral movement between agents (strata+1).

The more autonomy an agent has, the more its environment should look like a hardened, heavily monitored production service, not a shared dev box.
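Per-invocation authorization can be sketched with short-lived, HMAC-signed tokens that bind an agent identity to a single tool. Everything specific here is an assumption for illustration: the hard-coded `SECRET` (a real deployment would use a managed key), the `POLICY` table, the token format, and the 30-second lifetime.

```python
import hashlib
import hmac

SECRET = b"demo-only-secret"  # assumption: replace with a managed signing key

# Hypothetical policy: which tools each agent identity may call.
POLICY = {
    "triage-agent": {"read_alert"},
    "containment-agent": {"read_alert", "isolate_host"},
}


def _sign(message: str) -> str:
    return hmac.new(SECRET, message.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, tool: str, now: float, ttl: int = 30) -> str:
    """Issue a token valid for one tool and a short time window."""
    expires = int(now) + ttl
    message = f"{agent_id}|{tool}|{expires}"
    return f"{message}|{_sign(message)}"


def authorize(token: str, tool: str, now: float) -> bool:
    """Check signature, tool binding, expiry, and policy on every call."""
    try:
        agent_id, token_tool, expires, signature = token.split("|")
        expires_at = int(expires)
    except ValueError:
        return False
    expected = _sign(f"{agent_id}|{token_tool}|{expires}")
    return (
        hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8"))
        and token_tool == tool
        and now <= expires_at
        and tool in POLICY.get(agent_id, set())
    )
```

Checking policy at call time rather than at issue time means a revoked permission takes effect immediately, even for tokens already in circulation.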

Economics and Skills: Closing the Gap

Ralph-style loops change the economics of both attack and defense:

| Aspect | Traditional | With Ralph-style loops | Effect |
|---|---|---|---|
| Malware development | Months, team required | Days, small team/solo | 10–50× faster |
| Defensive analysis | Hours per sample | Minutes via agents | 5–10× faster |
| Threat adaptation | Manual, slow | Continuous, automated | Real-time evolution |
| Overhead | High coordination | Orchestrated automation | 3–5× cost reduction |

If defenders fail to adopt comparable automation, the asymmetry favors attackers. But with agentic SOCs, continuous red teaming, and automated analysis, defenders can reclaim ground and even surpass what was possible in purely manual environments (reliaquest+1, thehackernews).

The required skill set shifts in parallel:

- Designing agent architectures and loops.

- Writing security-aware specs and prompts.

- Governing orchestration, not just individual models.

- Practicing adversarial ML to attack and harden agentic systems (linearb, gigamon+1).

Recommendations for Security Leaders

Near term (0–90 days)

- Identify low-risk, repetitive SOC workflows and implement them as tightly scoped loops (alert enrichment, artifact collection, initial containment).

- Add runtime logging and basic anomaly detection around any existing AI-assisted tools.

- Start capturing guardrails as versioned files, not just prompt text.

Medium term (90–360 days)

- Map all current and planned agentic systems to the AWS scoping matrix and enforce autonomy limits.

- Build dedicated red team loops that continuously test agentic systems themselves.

- Roll out agent identity and per-tool authorization; treat agents as first-class principals.

Long term (12+ months)

- Aim for predictive defense: continuous red teaming, self-healing infrastructure, and automated remediation loops.

- Push for shared standards on agent observability, guardrails, and supply-chain security for tools and models.

Conclusion

The Ralph Loop is not inherently offensive or defensive—it is a general-purpose automation pattern that amplifies whatever intent it is attached to. Early AI-generated malware frameworks like VoidLink and adaptive families like PROMPTFLUX show what happens when attackers adopt this pattern aggressively: development cycles collapse from months to days, and mutation becomes the default behavior (cloud.google+1, thehackernews+1).

The answer is not to avoid agentic AI, but to use it more safely and more effectively than adversaries do. SOC automation, continuous red teaming, event-based monitoring, and explicit guardrails can bring defenders to parity or better. In that world, security is no longer something bolted onto agents; it is a property of how loops are specified, orchestrated, and governed.

Additional Resources and Further Reading

- Mastering Ralph loops transforms software engineering with LLMs (linearb+1)

- Coding is Dead: Meet The “Ralph Loop” (youtube)

- Ralph Loops: A Simple Approach to Agentic Coding (linkedin)

- VoidLink: Evidence That the Era of Advanced AI-Generated Malware Has Begun (research.checkpoint / research.checkpoint+1)

- GTIG AI Threat Tracker: Advances in Threat Actor Usage of AI Tools (cloud.google+1)

- AI-based malware makes attacks stealthier and more adaptive (cybersecuritydive+1)

- 11 Tips For AI Coding With Ralph Wiggum (aihero / aihero+1)

- 2026 – The year of the Ralph Loop Agent (dev / dev+1)

- VoidLink Linux Malware Framework Built with AI Assistance (thehackernews / thehackernews+1)

- Open Source Malware Index Q4 2025: Automation Overwhelms Ecosystems (sonatype)

- EvilAI Operators Use AI-Generated Code and Fake Apps (trendmicro)

- How “Agentic AI” Will Drive the Future of Malware (scworld+1)

- Agentic AI in Cybersecurity (redcanary / redcanary+1)

- Agentic AI In Cybersecurity: SOC Automation Led by AI Agents (reliaquest)

- The Agentic AI Security Scoping Matrix: A Framework for Securing Autonomous AI Systems (aws.amazon / aws.amazon+1)

- Security-Focused AI Agents: Benefits, Capabilities & Use Cases (exabeam+1)

- Agentic AI and Cybersecurity: Threats and Opportunities (blog.gigamon / gigamon+1)

- Why Agentic Orchestration Is the Next Step in AI Security (snyk+1 / labs.snyk)

- 8 Strategies for AI Agent Security in 2025 (strata+1)